Google’s new Bard AI fluffs its first demo with factual blunder

Which telescope took the first picture of an exoplanet? Don't ask Bard

After Google announced its new AI chatbot Bard earlier this week, it showed off exactly what the artificial intelligence engine was capable of with a demo on Twitter. Unfortunately, things didn’t go quite as planned.

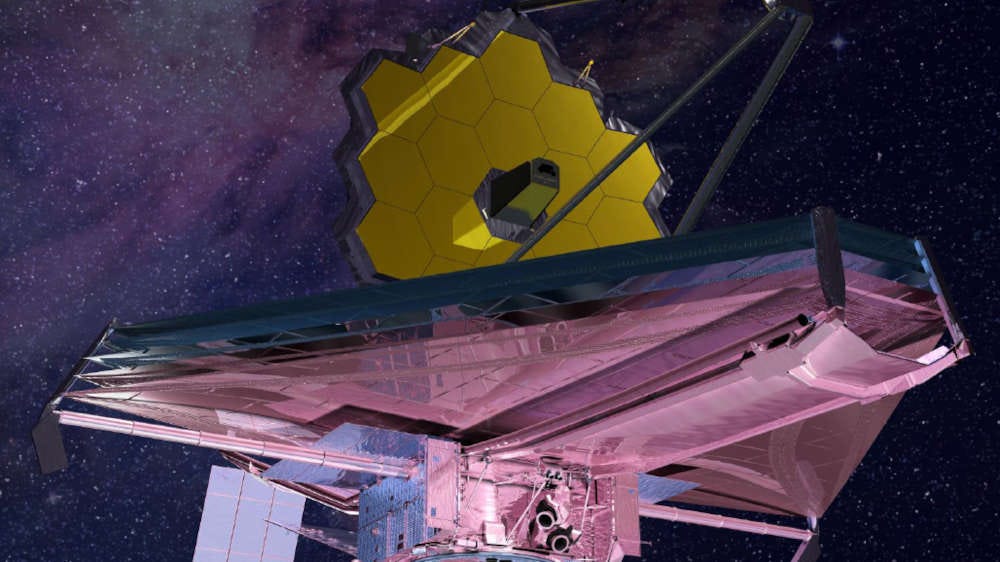

In the tweet, Bard is asked the question: What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about? It serves up a list of three facts about the groundbreaking astronomical instrument, which it says will “spark a child’s imagination about the infinite wonders of the universe”.

➡️ The Shortcut Skinny: Google Bard

😬 Google’s new AI Bard hasn’t had a great start

⛔ In a promo demo, it generated a factually incorrect answer

📣 Experts were quick to point out the mistake

🤔 It raises questions about the accuracy of AI-generated content and how it is verified

The problem is that one of these supposed facts is wrong. Bard says that the telescope “took the very first pictures of a planet outside of our own solar system,” but Dr Grant Tremblay, an astrophysicist at the Harvard & Smithsonian Centre for Astrophysics, pointed out that this isn’t true.

“Not to be a ~well, actually~ jerk, and I'm sure Bard will be impressive, but for the record: JWST did not take ‘the very first image of a planet outside our solar system,’” he said.

“The first image was instead done by Chauvin et al. (2004) with the VLT/NACO using adaptive optics,” he added, a point NASA corroborates.

“Ironically, if you actually search ‘what is the first image of an exoplanet’ on the original Google, the old-school Google, it gives you the correct answer. And so it’s funny that Google, in rolling out their huge multibillion dollar play into this new space, didn’t fact check on their own website,” Tremblay told New Scientist.

As Tremblay went on to say on Twitter, one of the persistent problems with AI language models is their tendency to be very confidently wrong, stating false information with enough conviction that it appears true to a layman.

Verification and fact-checking will only become more important as the technology is developed further and integrated into existing products. We’ve already seen CNET’s AI engine make basic math errors in financial explainer articles, as well as apparently plagiarize content.

As Google positions Bard and its AI tech as a replacement for existing search engines, and Microsoft moves Bing into AI-powered territory, questions over how AI-generated content is fact-checked will become more pressing. OpenAI has already released a tool to help detect whether a text was generated by AI (although in our experience it’s far from reliable), but factual verification may still be a task for humans.