ChatGPT-4o features: AI demos real-time conversational speech and emotion

OpenAI's spring update shows where the AI chatbot is headed, and it's pretty wild and mostly free

🤖 ChatGPT-4o is OpenAI’s latest model that’s faster and sounds more real

💻 A native ChatGPT app is coming to desktops – Mac first, Windows later

💰 It’s free (with limits), but some features will come to ChatGPT Plus first

😮 You can ask ChatGPT-4o to voice emotion and now interrupt the AI

👀 New vision capabilities can analyze on-screen and on-camera queries

💬 Real-time translation is possible using ChatGPT-4o

Update: The Shortcut can confirm that ChatGPT-4o has launched for ChatGPT Plus users paying $20/mo to OpenAI. We haven’t seen it launch for free users yet, nor have we seen the Mac app that’s supposed to launch today. Stay tuned for updates.

We just watched OpenAI demo ChatGPT-4o, its latest model, and it’s capable of wild real-time conversational speech and conveying human-like emotion. Here’s what’s new in the latest ChatGPT model, which launches one day before Google I/O and one month before Apple’s WWDC 2024 keynote.

“Talking to a computer has never felt really natural for me; now it does,” said OpenAI CEO Sam Altman. “I can really see an exciting future where we are able to use computers to do much more than ever before.”

🏃‍♂️ The voice model of ChatGPT-4o offers faster answers (no more 2-3 second lag), is more responsive to feedback, and can be interrupted so you don’t have to wait your turn to inject new prompts, according to the demo by OpenAI CTO Mira Murati. Basically, you can be rude to ChatGPT-4o to advance conversations.

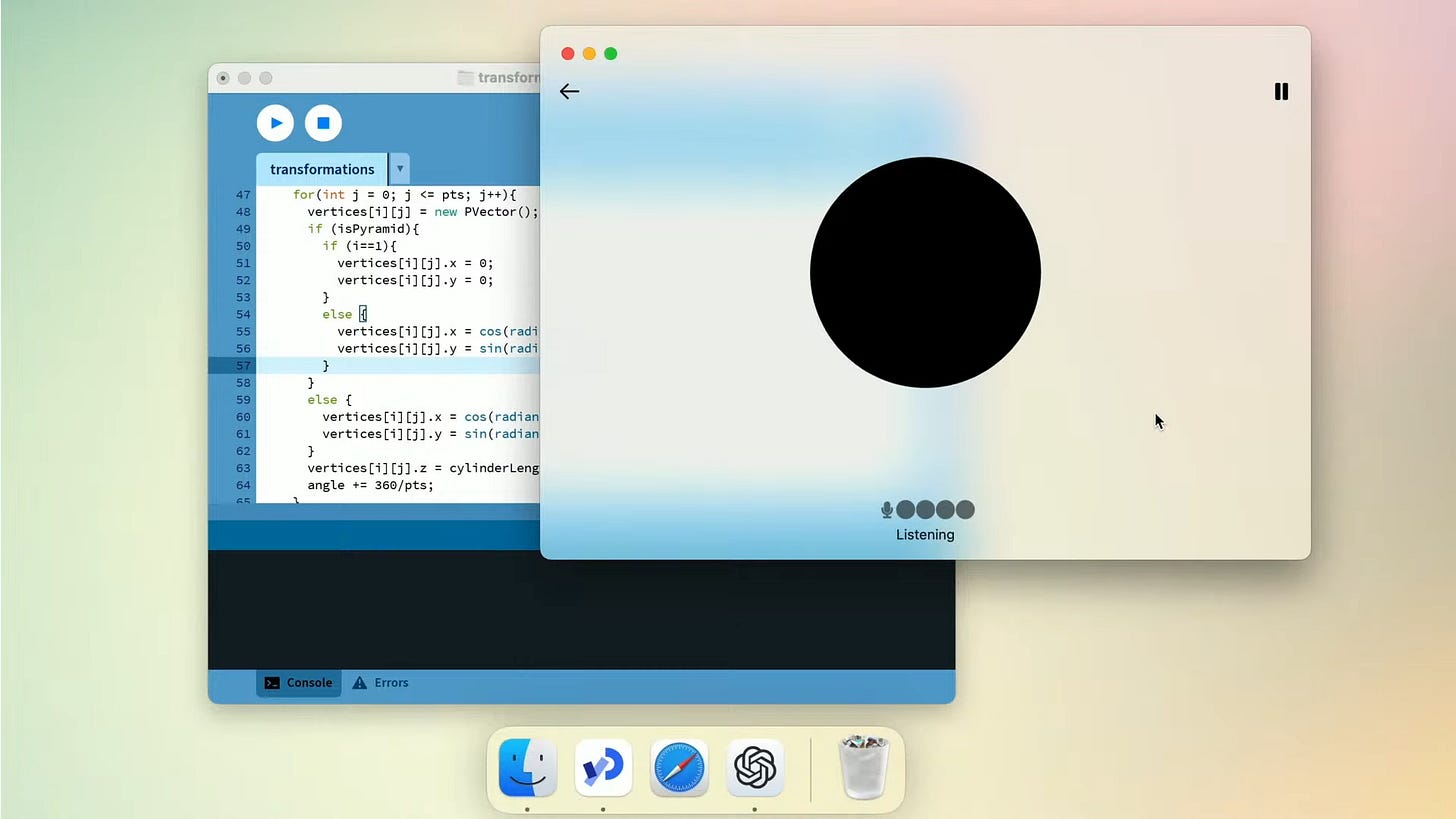

💻 Desktop version. ChatGPT is getting a simple desktop app with a new UI. It’s supposed to launch today, but I haven’t seen it available to download just yet.

🆓 ChatGPT set free. Until now, free users were limited to ChatGPT 3.5, while ChatGPT Plus members, paying $20 a month, could access ChatGPT 4 with more up-to-date data. That’s changing starting today (again, it’s not live just yet), as both ChatGPT-4o and GPT Store tools become free. Paying members will still get 5x the capacity limits of free users and, likely, first access to ChatGPT-5 when it arrives.

😮 Drama alert. ChatGPT-4o can tweak its voice to add emotion. Research lead Mark Chen asked GPT-4o to tell a bedtime story and it began with a normal-sounding “Once upon a time...” Stopping the AI by interrupting with new prompts, he asked for more emotion, and then even more emotion, and each time the AI would raise and lower its voice pitch more. Then it was asked to do it in a robot voice, and it complied.

😫 Sensing your emotions. ChatGPT-4o can also pick up on your emotions. In the demo, Mark Chen asked for breathing exercises, and the AI noticed he was basically hyperventilating instead of breathing in and out calmly. “Mark, you’re not a vacuum cleaner!” it said, before asking him to adjust his breathing technique.

👀 Vision capabilities can analyze math on a sheet of paper (and offer hints instead of full answers if you’d like), solve complex coding problems via screen share (and explain them with plain language), and analyze and explain detailed charts.

💬 Real-time translation. Just about the only thing I liked in my Rabbit R1 review was its simple translator tool, but ChatGPT-4o goes further with seamless back-and-forth conversation and a natural-sounding voice. The demo was a quick one, so we’ll go hands-on with the new ChatGPT soon to confirm that it’s indeed better than the translator mode in our Samsung Galaxy S24 Ultra review.

⚙️ More ChatGPT-4o specs. OpenAI said that the new model can use memory to sense the continuity of all your conversations. Its API is also 2x faster, 50% cheaper, and has 5x higher rate limits vs GPT-4 Turbo.

🤔 Where does that leave paying users? If ChatGPT-4o is launching for free, why would anyone stick with the $20/mo plan? According to OpenAI, paying members will get 5x the capacity limits of free users. The company didn’t say this, but they’ll likely also get first access to ChatGPT-5 when it launches later this year.

Update: In a statement on X in the late afternoon, the company said: “All users will start to get access to GPT-4o today. In coming weeks we’ll begin rolling out the new voice and vision capabilities we demo’d today to ChatGPT Plus.”
